There’s a lot of buzz in the media right now about a new artificial-intelligence chatbot that anybody can try out. It’s ChatGPT, from a project called OpenAI. I tried it out. It’s interesting for about ten minutes, at which point it becomes apparent that, though it’s a beautiful piece of software engineering, its usefulness is limited.

We’re not in the slightest danger of having artificial intelligence take our jobs — with the possible exception of customer service jobs of the sort outsourced to Asia, jobs in which people with almost no knowledge read from scripts, following prompts on a computer screen. ChatGPT’s English is vastly better, though.

The only thing that I find truly impressive about ChatGPT is its ability to parse and compose English sentences (natural language processing), something that programmers have been working on for many years. Having parsed your question, ChatGPT responds in a way that sounds as though it is reading to you from an encyclopedia.

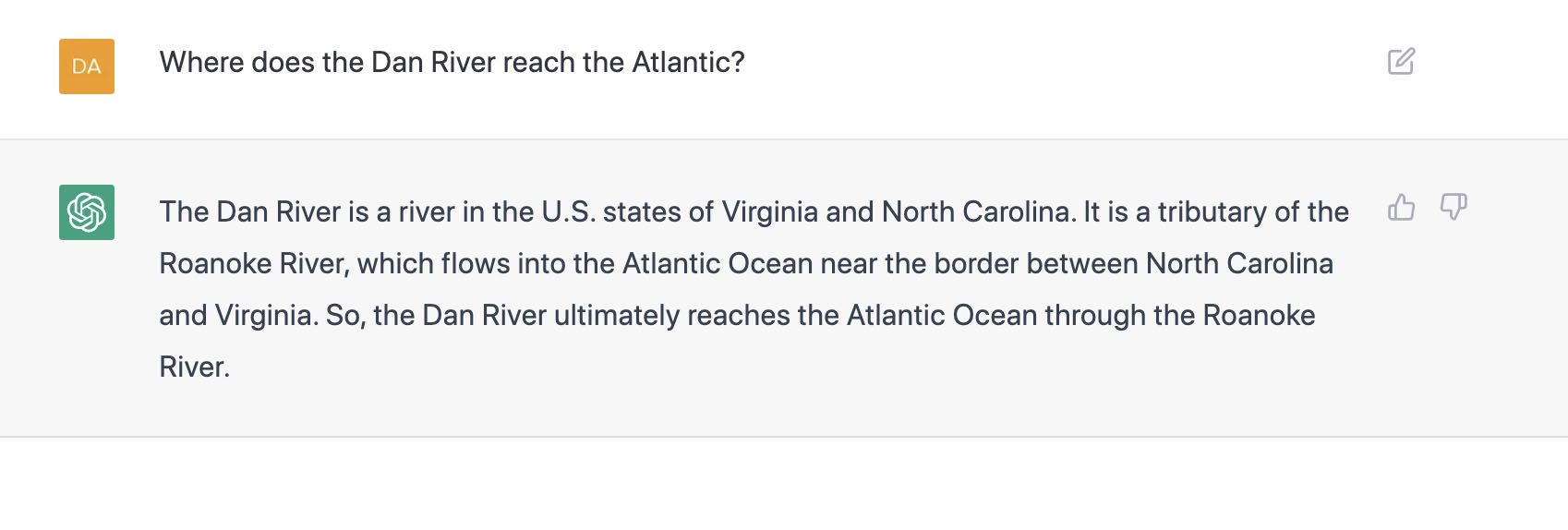

Here’s an example:

That is a very good answer. During my chat with ChatGPT, all the English was perfectly composed, with no gaffes and no grammatical errors. That’s impressive. Still, the “intelligence” of ChatGPT just boils down to reading to you from an encyclopedia. ChatGPT is nothing more than a sophisticated search prompt that responds with a well-composed English sentence or two rather than giving you links to online articles.

I ignore all the hype about artificial intelligence. AI, ultimately, in my view, will be more useful than other tech wonders such as Bitcoin. But it’s not going to replace real knowledge based on understanding and experience. Will there be military uses for artificial intelligence? There almost certainly will be, I would think. But the danger would be in human beings using computer algorithms to control weapons aimed at other human beings, not in computers becoming smarter than humans and taking over.

It was long ago, 1989, when Roger Penrose, in a book named The Emperor’s New Mind, argued that the human mind and human consciousness are not algorithmic. That is, according to Penrose, no digital computer, no matter how complicated and sophisticated, will ever be able to do what the human mind does. Penrose’s theory is that the brain has some as yet unknown mechanism that makes use of quantum mechanics and quantum entanglement. Quantum computers, if they can be built, may open up non-algorithmic possibilities for artificial intelligence. But that’s a long way off. Penrose, I believe, is the Einstein of our time. I am persuaded by his argument. AI programmers think Penrose is wrong. But of course they would.

A big limitation of ChatGPT is that, if you ask about current events, you’ll be told that ChatGPT has no knowledge of current events. It’s not hard to see why. Feeding information to ChatGPT’s database must have been difficult, in that the information surely had to be pre-parsed somehow into a specialized form in which ChatGPT could make use of it. That took time. I can’t imagine that it would be possible (not yet, anyway) to just instruct ChatGPT to go online and read everything on Wikipedia. A future AI that constantly updates its database the way Google constantly updates its search engine would be far more useful and would be a fine research tool. If the ChatGPT engine is open source, then I can imagine programmers doing wondrous, though specialized, things with it. I also can imagine, in the future, research engines that have been fed the contents of entire university libraries. That would be a fantastic tool, but it would hardly be a machine capable of taking over the world.

Update: The Atlantic has a piece today by a high school English teacher, “The End of High School English.” I’m not quite sure what the concern is. Is it that students will use bots to write their papers? Or is it that, with bots available, students no longer need to learn to write?